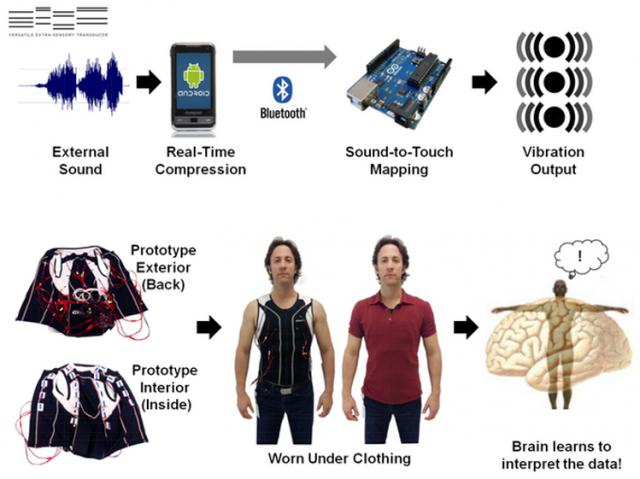

The VEST, or the Versatile Extra-Sensory Transducer, is an unconventional hearing device that allows the hearing-impaired to feel and understand speech through a series of vibrations.

David Eagleman, a neuroscientist at Houston’s Baylor College of Medicine; Scott Novich, a PhD student in Eagleman’s lab; and a team of six electrical and computer engineering students from Rice University tested a prototype on Jonathan, a 37-year-old deaf man. After five days of wearing the VEST, Jonathan was able to understand and write down the exact words being said to him.

Eagleman told the audience at TED 2015 in Vancouver:

“Jonathan is not doing this consciously, because the patterns are too complicated, but his brain is starting to unlock the pattern that allows it to figure out what the data mean, and our expectation is that, after wearing this for about three months, he will have a direct perceptual experience of hearing in the same way that when a blind person passes a finger over braille, the meaning comes directly off the page without any conscious intervention at all.

“This technology has the potential to be a game-changer, because the only other solution for deafness is a cochlear implant, and that requires an invasive surgery. And this can be built for 40 times cheaper than a cochlear implant, which opens up this technology globally, even for the poorest countries.”

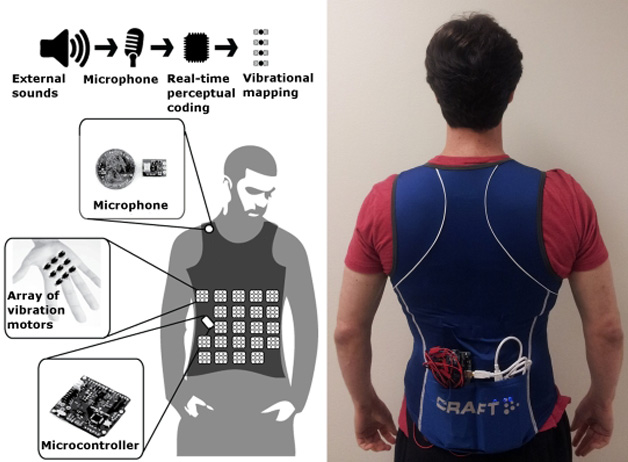

Driven by the principle of sensory substitution, the low-cost, noninvasive VEST picks up sound through a mobile app and converts it into tactile vibration patterns on the wearer’s torso. The brain learns to interpret particular VEST vibrations as particular sounds, allowing deaf people to navigate complex soundscapes and, in time, to perceive sound as directly and effortlessly as through ordinary hearing. Eagleman told WIRED:

“We’re taking advantage of skin, this incredible computational material, and using it to pass on data. We pick up sounds and do all the computation to break it into 40 streams of information. The VEST has vibratory motors all over it and we convert the data into patterns of vibration, which we tap as a dynamic moving pattern on to the torso.

“Each motor represents some frequency band: low, high and everything in between. If I’m talking to you, my voice is represented by a sweeping pattern of touch across your skin. By establishing correlations with the outside world – as in, every time I hit this piano key I feel this pattern, or every time someone says my name it feels like that – the brain eventually figures out how to translate the patterns into an understanding of the auditory world.”
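The article does not describe the VEST’s actual signal processing, but the idea in these quotes, splitting sound into frequency bands and giving each band its own motor from low to high, can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions: audio sampled at 8 kHz, 40 ms frames, 40 equal-width bands from 0 to 4 kHz (one per motor, following the “40 streams” Eagleman mentions), and each band’s energy scaled to a 0 to 1 drive level. The function names (`magnitude_spectrum`, `motor_levels`, `tone`) are hypothetical, and the real device may use different band spacing, filtering, or compression.

```python
import cmath
import math

# Assumed parameters for illustration; the article does not specify any of these.
SAMPLE_RATE = 8000  # Hz
FRAME_SIZE = 320    # samples per frame (40 ms at 8 kHz)
NUM_MOTORS = 40     # one motor per "stream", an assumed mapping


def magnitude_spectrum(frame):
    """Magnitudes of DFT bins 0 .. N/2 - 1 for one frame (naive O(N^2) DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2)
    ]


def motor_levels(frame, num_motors=NUM_MOTORS):
    """Hypothetical mapping: split the spectrum into equal-width bands (lowest
    frequencies first), one per motor, and scale each band's energy to 0.0-1.0."""
    mags = magnitude_spectrum(frame)
    bins_per_band = len(mags) // num_motors
    energies = [
        sum(m * m for m in mags[b * bins_per_band:(b + 1) * bins_per_band])
        for b in range(num_motors)
    ]
    loudest = max(energies)
    if loudest == 0:
        return [0.0] * num_motors  # silence: every motor stays off
    return [e / loudest for e in energies]


def tone(freq_hz, n=FRAME_SIZE, rate=SAMPLE_RATE):
    """A pure sine tone, used only to demonstrate the mapping."""
    return [math.sin(2 * math.pi * freq_hz * t / rate) for t in range(n)]


if __name__ == "__main__":
    # Low tones should drive low-numbered motors, high tones high-numbered ones.
    for f in (200, 1000, 3000):
        levels = motor_levels(tone(f))
        print(f, "Hz -> strongest motor", levels.index(max(levels)))
```

Running it shows the low-to-high layout Eagleman describes: a 200 Hz tone drives motor 2 hardest, a 1000 Hz tone motor 10, and a 3000 Hz tone motor 30. Equal-width bands keep the sketch simple; a perceptually motivated design would more likely give low frequencies narrower bands (as the mel or Bark scales do), and a practical implementation would use an FFT rather than this naive DFT.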

Does that mean the VEST is simply translating sounds into a code? Eagleman pointed out to The Atlantic that the vibration patterns are not a “language” to be interpreted like Braille; the VEST responds to all ambient sounds and noises.

“The pattern of vibrations that you’re feeling [while wearing the VEST] represents the frequencies that are present in the sound. What that means is what you’re feeling is not code for a letter or a word—it’s not like Morse code—but you’re actually feeling a representation of the sound.”

Eagleman and his team are not only refining the VEST for the hearing-impaired but also experimenting with consumer-grade versions aimed at expanding everyday human perception. With devices like the VEST, he says, people may one day be able to pick up on information that is already flowing through their bodies.

He believes the VEST could open up robotics by letting humans feel what robots feel. Pilots controlling a quadcopter or drone could “sense” the aircraft’s movements from the ground. Astronauts could “feel” the health of the International Space Station. People could have 360-degree vision or “see” in infrared or ultraviolet, not with their eyes but through data delivered to the VEST over Bluetooth or Wi-Fi. With the VEST, you could even “feel” invisible states of your own health, such as your blood sugar or the state of your microbiome.

“As we move into the future, we’re going to increasingly be able to choose our own peripheral devices. We no longer have to wait for Mother Nature’s sensory gifts on her timescales, but instead, like any good parent, she’s given us the tools that we need to go out and define our own trajectory. So the question now is, how do you want to go out and experience your universe?”

This article (Neuroscientist Invents VEST that Gives Humans a Sixth Sense) is free and open source. You have permission to republish this article under a Creative Commons license with attribution to the author and AnonHQ.com.

http://anonhq.com/neuroscientist-invents-vest-gives-humans-sixth-sense/

Comments

Consciousness disclaimer?

I wonder what evidence Mr Eagleman has for stating that this is not a conscious understanding of the vibrations. Without consciousness, how are exact words being processed? Just because science is not able to validate consciousness does not mean that it is nothing. I also feel that this device would allow the user to discern truth in what is being said through the same vibrations used in word and sentence cognition. On top of that, I would love to see testing of music translated to words: tunes without words, just the music. What does the single voice of an orchestra say? The difference between 432 Hz and 440 Hz would likely be significant. Please tune to 432 Hz, or you will only get part of the picture.